One of the most persistent misconceptions about AI is that it replaces human judgment.

In practice, it does something far more uncomfortable:

it exposes where judgment was never clearly defined in the first place.

When AI systems fail, the failure is rarely “bad intelligence.” It’s usually unclear expectations, inconsistent decision rules, or silent assumptions that were never written down.

Where AI Breaks Things Open

AI forces questions that many organizations avoided for years:

- What does “good” actually look like?

- Who owns decisions when outcomes are uncertain?

- Which errors are acceptable, and which aren’t?

- Where does human review actually matter?

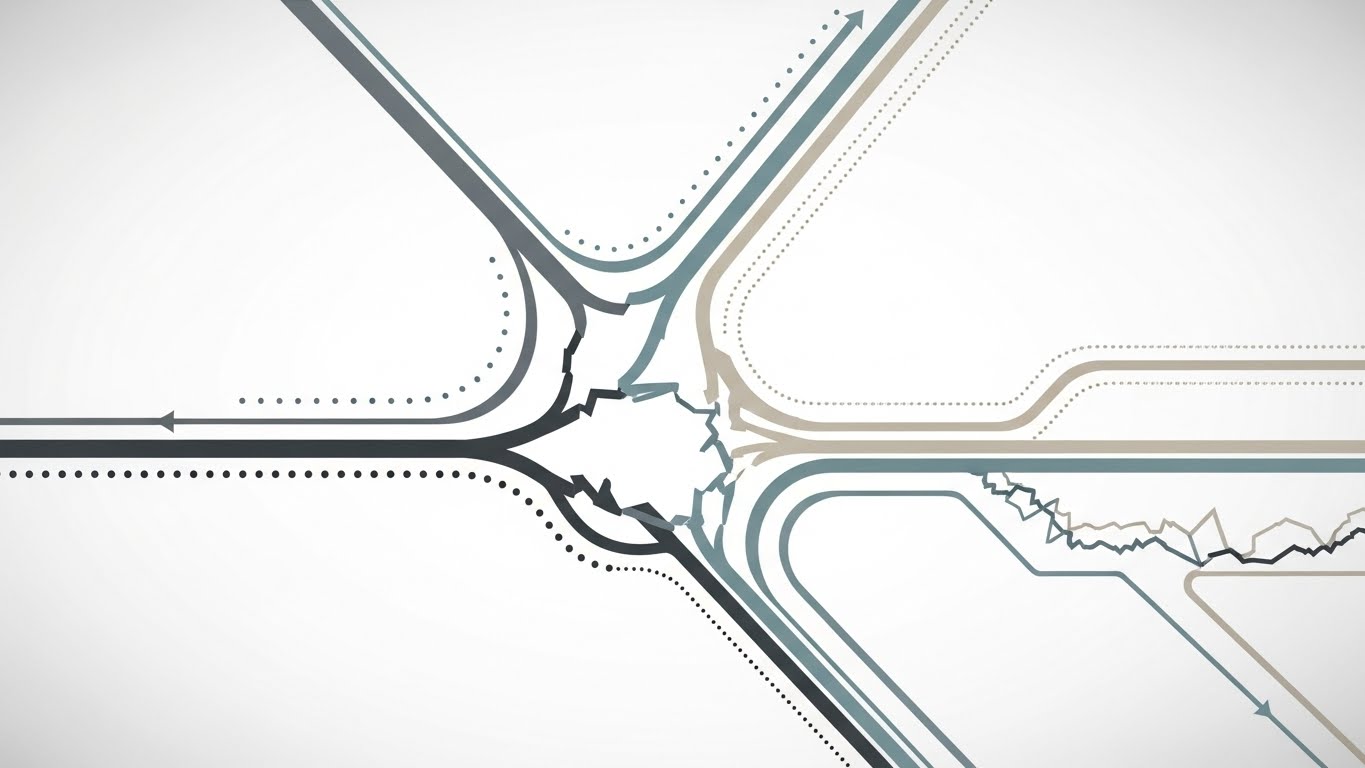

If those questions don’t have answers, AI doesn’t smooth the process. It destabilizes it.

Why This Feels Like a Regression

Teams often interpret this exposure as a failure of the technology.

In reality, it’s a failure of structure.

AI systems require explicit thinking. They demand clarity about intent, thresholds, and boundaries. That rigor can feel uncomfortable in environments that have long relied on informal judgment or tacit knowledge.

The Practical Shift

Successful teams don’t treat AI as a replacement for judgment.

They treat it as a forcing function.

They define decisions more clearly.

They isolate high-risk areas.

They design review paths intentionally.

The result isn’t less human involvement; it’s better human involvement.

AI doesn’t remove judgment.

It insists that judgment finally be taken seriously.